This post is about something I have not discussed before, but somehow it has been present in every screenshot or video I have posted for a while now.

For more than a year I have been building a little farm of machines. They run a series of programs I wrote for procedural generation. They work in parallel, sometimes doing the same task over different locations of the virtual world, sometimes running tasks that are very different from one another. Their efforts are highly coordinated. Thanks to this I can get large portions of terrain, forests and buildings generated in very little time. I can see the results of the changes I make to the world definition without having to wait too long.

Best of all, this setup allows me to add more nodes to the network at any time. I only have three decent machines in the farm right now. They are old gaming rigs I found on Kijiji around Montreal. The minimum spec is 8 GB of RAM, 3 or 4 cores and an ATI video card equal to or better than a 4770. I need them to have GPUs because some algorithms use OpenCL. I cannot afford to get too many of them right now, but having software that scales over multiple systems is already saving me time.

What I like the most is that it really feels like a single very powerful machine. The existence of the farm is completely transparent to the application I use to design virtual worlds. I will cover this application in a future post. You have already seen many screenshots taken from it without knowing.

I would like to introduce you to some of the different animals I keep in this farm and explain a little about what they do.

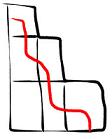

Dispatcher

The dispatcher controls everything that happens in the farm. There is a single instance of this process for the entire farm. At any time the dispatcher keeps track of the different jobs currently active. Each job may be at a different stage. The dispatcher knows which farm worker should receive the next request. All the coordination happens over TCP/IP. The dispatcher listens on two different ports. One is for the farm workers to report their progress and get new work assignments. The other is for clients of the farm to request new jobs and query the status of ongoing jobs.
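To make the bookkeeping a bit more concrete, here is a rough Python sketch of the kind of state a dispatcher like this might keep. It is not the actual code running in the farm. The class, the method names and the stage list are all hypothetical, and the TCP/IP layer is left out completely: the two ports are stood in for by "worker side" and "client side" methods.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical stage order for a single cell (an assumption for this sketch).
STAGES = ["contour", "decimate", "project"]


@dataclass
class Dispatcher:
    pending: deque = field(default_factory=deque)  # (cell, stage) waiting for a worker
    active: dict = field(default_factory=dict)     # worker id -> (cell, stage)
    done: set = field(default_factory=set)         # cells that finished every stage

    def submit(self, cell):
        """Client side: request that a cell be processed from the first stage."""
        self.pending.append((cell, STAGES[0]))

    def request_work(self, worker):
        """Worker side: hand out the next task, or None if nothing is queued."""
        if not self.pending:
            return None
        task = self.pending.popleft()
        self.active[worker] = task
        return task

    def report_done(self, worker):
        """Worker side: the task finished, so queue the next stage of that cell."""
        cell, stage = self.active.pop(worker)
        nxt = STAGES.index(stage) + 1
        if nxt < len(STAGES):
            self.pending.append((cell, STAGES[nxt]))
        else:
            self.done.add(cell)

    def worker_lost(self, worker):
        """A worker vanished: put its task back so another worker can redo it."""
        task = self.active.pop(worker, None)
        if task is not None:
            self.pending.appendleft(task)

    def status(self, cell):
        """Client side: report the stage a cell is currently at."""
        if cell in self.done:
            return "done"
        for c, stage in list(self.pending) + list(self.active.values()):
            if c == cell:
                return stage
        return "unknown"
```

In this sketch a task that was handed to a worker that later disappears simply goes back to the front of the queue, so the worker never has to say goodbye.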

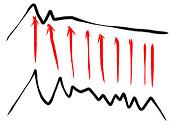

Contour

Several layers make up the virtual world. Some are terrain layers, some are vegetation, some are buildings and roads. All these layers have something in common: they represent a volume with an inside and an outside. Contouring is the process that finds the surface dividing the inside from the outside. The world is broken into many Octree cells. Each contour worker can process a cell individually. It knows which layers intersect the cell, so it runs an algorithm known as Dual Contouring on the contents of the cell. The result is a very detailed polygonal mesh.
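The inside/outside idea can be sketched in a few lines of Python. This is not Dual Contouring itself, only its first step: finding the grid edges of a cell where one end is inside the volume and the other is outside, which is where the surface must pass. The sphere layer, the function names and the regular sampling grid are all assumptions made for the example.

```python
import itertools


def sphere(cx, cy, cz, r):
    """Hypothetical layer: negative inside a sphere, positive outside."""
    return lambda x, y, z: ((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2) ** 0.5 - r


def union(layers):
    """A point is inside the world if it is inside any of the layers."""
    return lambda x, y, z: min(layer(x, y, z) for layer in layers)


def crossing_edges(field, origin, size, n):
    """Return the grid edges of a cubic cell whose endpoints lie on opposite
    sides of the surface. The cell is sampled on an (n+1)^3 grid."""
    step = size / n

    def sample(i, j, k):
        return field(origin[0] + i * step, origin[1] + j * step, origin[2] + k * step)

    edges = []
    for i, j, k in itertools.product(range(n + 1), repeat=3):
        a = sample(i, j, k)
        # Only look along +x, +y and +z so each edge is visited once.
        for di, dj, dk in ((1, 0, 0), (0, 1, 0), (0, 0, 1)):
            ni, nj, nk = i + di, j + dj, k + dk
            if ni > n or nj > n or nk > n:
                continue
            b = sample(ni, nj, nk)
            if (a < 0) != (b < 0):  # sign change: the surface crosses this edge
                edges.append(((i, j, k), (ni, nj, nk)))
    return edges
```

A full contouring pass would go on to place one vertex per grid cube touched by these edges and connect them into polygons. A cell with no crossing edges is entirely inside or entirely outside and produces no mesh at all.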

Decimate

The meshes produced by the contour phase are very dense. If they were fed to the next processing stages as they are, they would slow them down. For this reason they go through a phase of decimation. This is a fast Multi-Choice mesh optimization that preserves topology and only removes those triangles that make very little difference to the mesh. The resulting mesh is very close to the original, but the number of triangles is drastically reduced.

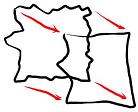

Reduce

I use a LOD system to replace several small distant cells with a larger cell. Since they are Octree cells, this means combining eight child cells into one large parent cell. Even though it covers eight times the space, the parent cell must be similar in byte size to each child cell. This means the eight children must be brought together and compressed. The compression at this phase does change the mesh topology; otherwise it would be impossible to achieve the target sizes. The resulting parent cells are then combined again into larger parent cells and so on, until the highest LOD cells are obtained.
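The parent and child relationship is simple enough to show as code. In this small Python sketch a cell is identified by a tuple (lod, x, y, z), where LOD 0 is the finest level. That addressing scheme and the function names are assumptions for illustration, not the format the farm actually uses.

```python
def parent_of(cell):
    """The parent sits one LOD up and covers a 2x2x2 block of children."""
    lod, x, y, z = cell
    return (lod + 1, x >> 1, y >> 1, z >> 1)


def children_of(cell):
    """The eight cells one LOD down that together fill this cell."""
    lod, x, y, z = cell
    return [
        (lod - 1, 2 * x + dx, 2 * y + dy, 2 * z + dz)
        for dx in (0, 1)
        for dy in (0, 1)
        for dz in (0, 1)
    ]


def reduction_ratio(child_sizes, target_bytes):
    """How many times smaller the merged mesh must become to fit the budget."""
    return sum(child_sizes) / target_bytes
```

With eight children of roughly the target size each, the ratio comes out at about 8, which gives an idea of why a simplification that preserves topology is not enough at this stage.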

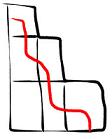

Project

This process takes a high resolution mesh from the decimate or reduce phases and creates a very simplified mesh out of it. Then it projects the excess geometry onto a normal map. The results are compressed as I described before and stored in a cell definition file. These are the files that are sent to the client for rendering. At this point the processing for a single cell is pretty much done.

I have not covered the generation of cities, architecture, forests and other elements here. They blend into this sequence and also live in the farm, but I think they deserve a dedicated post.

Probably the most interesting aspect of writing a collective of programs like this was how to make it reliable. Since I was targeting unreliable hardware to begin with, I realized failure had to be an integral part of the design. I devised a system where none of these processes expects a proper shutdown. They can just vaporize at any point. In fact, I did not implement a way for them to exit gracefully. When one needs to close, the process is simply killed. The collective has to be resilient enough that no data corruption arises from such a failure.
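One common way to get this kind of kill-safety for output files is to never write to the final file directly. The Python sketch below shows that general technique and is not a description of what the farm actually does: the data goes to a temporary file in the same directory, and only when it is complete does it get renamed over the real name. The function name is hypothetical.

```python
import os
import tempfile


def write_cell_atomically(path, data: bytes):
    """Write data so that readers see either the old file or the new one,
    never a half-written file, even if this process is killed midway."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temporary file lives next to the target so the rename stays on one volume.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())  # make sure the bytes reach the disk first
        os.replace(tmp_path, path)  # atomic swap into the final name
    except BaseException:
        # Clean up our own leftovers on an ordinary error, then re-raise.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
```

If the process is killed before the rename, the only thing left behind is a stray `.tmp` file that can be deleted later, and the work can simply be handed to another worker.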