We have this new system we are currently testing. We call it Meta Materials. What is a meta material? It is just stuff.

Let's build an abstract snowman:

We could render this using a nice snow material for the snowballs. For traditional rendering this would be a normal map and some other maps describing surface properties, like roughness, specularity, etc.

What happens if the camera gets extremely close? In a real snowman you would see that there is no single clear surface. The packed snow is full of holes and imperfections. If we go close enough we may be able to see the ice crystals.

A meta material is information about stuff at multiple scales. It helps a lot when dealing with larger things than a snowman. A mountain for instance:

An artist hand-built this at the grand level. It would be too much work to manually sculpt every rock and little bump in the ground. Instead, the artist has classified what type of material goes in every spot. These are the meta materials. At a distance they may be rendered using just surface properties. Up close, however, they can be quite large geometric features measuring 10 meters or more.
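To make the idea of switching between surface properties and geometry concrete, here is a minimal sketch. Everything in it is an assumption made for illustration: the `MetaMaterial` record, the `representation` function and the 4 pixel cutoff are hypothetical and are not part of the engine.

```python
import math
from dataclasses import dataclass


@dataclass
class MetaMaterial:
    """Hypothetical record: one kind of 'stuff' described at several scales."""
    name: str
    feature_size_m: float  # size of the largest geometric feature it can produce


def projected_size_px(size_m, distance_m, fov_deg=60.0, screen_height_px=1080):
    """Approximate on-screen height, in pixels, of an object size_m tall."""
    view_height_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return size_m / view_height_m * screen_height_px


def representation(material, distance_m, min_geometry_px=4.0):
    """Pick geometry when features are big enough on screen, else surface maps."""
    if projected_size_px(material.feature_size_m, distance_m) >= min_geometry_px:
        return "geometry"
    return "surface"


rock = MetaMaterial("rocky ground", feature_size_m=10.0)
near = representation(rock, distance_m=50.0)     # "geometry"
far = representation(rock, distance_m=20000.0)   # "surface"
```

The same material answers differently depending on how large its features appear on screen, which is the multi-scale behavior described above.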

We would like a system that seamlessly covers everything from mountains to snowflakes, but we are taking smaller steps:

Here you can see a massive feature measuring more than 2 kilometers.

As you approach it, it comes into nice detail. Here is something that looks like a cave entrance. It is on top of the large thing. Note the rocks on the ground; these come from the meta-material:

I like this direction, it is a simple and robust way to define procedural objects of any shape and size. We intend to release this as an engine feature soon, I am looking forward to what people can create with a system like this. I will be posting more screenshots and videos about it.

Following one man's task of building a virtual world from the comfort of his pajamas. Discusses Procedural Terrain, Vegetation and Architecture generation. Also OpenCL, Voxels and Computer Graphics in general.

Wednesday, December 16, 2015

Friday, November 27, 2015

Global Illumination over Clipmaps

Global illumination is one of my favorite problems. It is probably the simplest, most effective way to make a 3D scene come to life.

Traditional painters were taught to just "paint the light". This was centuries before 3D graphics were a thing. They understood how light bounced off surfaces picking up color on its way. They would even account for changes in the light as it went through air.

Going into realtime 3D graphics we had to forget all of this. We could not just draw the light as it was computationally too expensive. We had to concentrate on rendering subjects made of surfaces and hack the illumination any way we could. Or we could bake the stuff, which looks pretty but leaves us with a static environment. A house entirely closed should be dark, opening a single window could make quite a difference and what happens if you make a huge hole in the roof?

For sandbox games this is a problem. The game maker cannot know how deep someone will dig or if they will build a bonfire somewhere inside a building.

There are some good solutions out there to realtime global illumination, but I kept looking for something simpler that would still do the trick. In this post I will describe a method that I consider good enough. I am not sure if this has been done before, so please leave me a link if you see that is the case.

This method was somewhat of an accident. While working with occlusion I saw that determining what was visible from any point of view was a similar problem to finding out how light moves. I will try to explain it using the analogy of a circuit.

Imagine there is an invisible circuit that connects every point in space to its neighboring points. For each point we also need to know a few physical properties, like how transparent it is and how it changes the light direction and color.

Why use something like that? In our case it was something we were getting almost for free from the voxel data. We saw we could not use every voxel, it resulted in very large circuits, but the good news was we could simplify this circuit pretty much the same way you collapse nodes in an octree. In fact the circuit is just a dual structure superimposed on the octree.

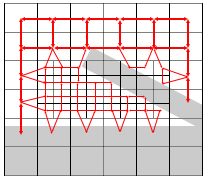

Consider the following scene:

The grey areas represent solid, white is air, and the black lines are an octree (a quadtree in this 2D view) that covers the scene at adaptive resolution.
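As a rough illustration of the adaptive structure, here is a minimal quadtree sketch that collapses uniform regions into single leaves. The dictionary layout and the function names are assumptions for this example, not the engine's data structures.

```python
def build_quadtree(grid, x=0, y=0, size=None):
    """Return a nested quadtree over a square grid of 0 (air) / 1 (solid).

    A uniform region collapses into a single leaf; a mixed region is split
    into four children.
    """
    if size is None:
        size = len(grid)  # assumes a square grid whose side is a power of two
    cells = {grid[y + j][x + i] for j in range(size) for i in range(size)}
    if len(cells) == 1:
        return {"x": x, "y": y, "size": size, "solid": cells.pop() == 1}
    half = size // 2
    return {
        "x": x, "y": y, "size": size,
        "children": [
            build_quadtree(grid, x + dx, y + dy, half)
            for dy in (0, half) for dx in (0, half)
        ],
    }


def leaves(node):
    """Yield every leaf of the tree."""
    if "children" in node:
        for child in node["children"]:
            yield from leaves(child)
    else:
        yield node


scene = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 1],
]
tree = build_quadtree(scene)
all_leaves = list(leaves(tree))
air_leaves = [n for n in all_leaves if not n["solid"]]
```

The 16 cells of this small scene collapse into 7 leaves, 5 of them air. In a circuit built on top of such a tree, the air leaves would be the nodes light can travel through.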

The light circuit for this scene would be something like:

Red arrows mean connections between points where light can freely travel.

Once you have this, you could feed light into any set of points and run the node-to-node light transfer simulation. Each link conducts light based on its direction and the light's direction. Each link also has the potential to change the light properties: it could make the light bounce, change color or be completely absorbed.

It turns out that this converges after only a few iterations. Since the octree has to be updated only when the scene changes, you could run the simulation many times over the same octree, for instance when the sun moves or a dragon breathes fire.
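Here is a toy version of the node-to-node transfer, written as a sketch under heavy simplifications that are mine and not the engine's: a uniform 2D grid instead of the collapsed octree, a single scalar energy instead of color, links only to the four direct neighbors, and a bounce that sends a fixed fraction (`albedo`) straight back. The function name `simulate` is hypothetical.

```python
def simulate(solid, seeds, albedo=0.5, max_iters=32, eps=1e-4):
    """Move light from node to node until none is left in flight.

    solid[y][x] is 1 for solid, 0 for air.
    seeds maps ((x, y), (dx, dy)) to the energy entering that node while
    travelling in that direction.
    Returns the light accumulated per node and the number of iterations run.
    """
    h, w = len(solid), len(solid[0])
    flux = dict(seeds)
    lit = [[0.0] * w for _ in range(h)]
    iterations = 0
    while flux and iterations < max_iters:
        iterations += 1
        nxt = {}
        for ((x, y), (dx, dy)), energy in flux.items():
            lit[y][x] += energy
            tx, ty = x + dx, y + dy
            if not (0 <= tx < w and 0 <= ty < h):
                continue  # the light leaves the scene
            if solid[ty][tx]:
                # The surface absorbs part of the light and bounces the rest back.
                back = energy * albedo
                if back > eps:
                    key = ((x, y), (-dx, -dy))
                    nxt[key] = nxt.get(key, 0.0) + back
            else:
                key = ((tx, ty), (dx, dy))
                nxt[key] = nxt.get(key, 0.0) + energy
        flux = nxt
    return lit, iterations


# A narrow vertical shaft: 1 is solid, 0 is air.
shaft = [
    [1, 0, 1],
    [1, 0, 1],
    [1, 0, 1],
    [1, 1, 1],
]
# Feed one unit of light into the top of the shaft, travelling down (+y).
lit, iterations = simulate(shaft, {((1, 0), (0, 1)): 1.0})
```

In this tiny scene the light reaches the floor, half of it bounces back up, and the simulation runs out of light in flight after 6 iterations. Because the grid is not touched by the simulation, the same structure can be reused with different seeds.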

To add sunlight we can seed the top nodes like this:

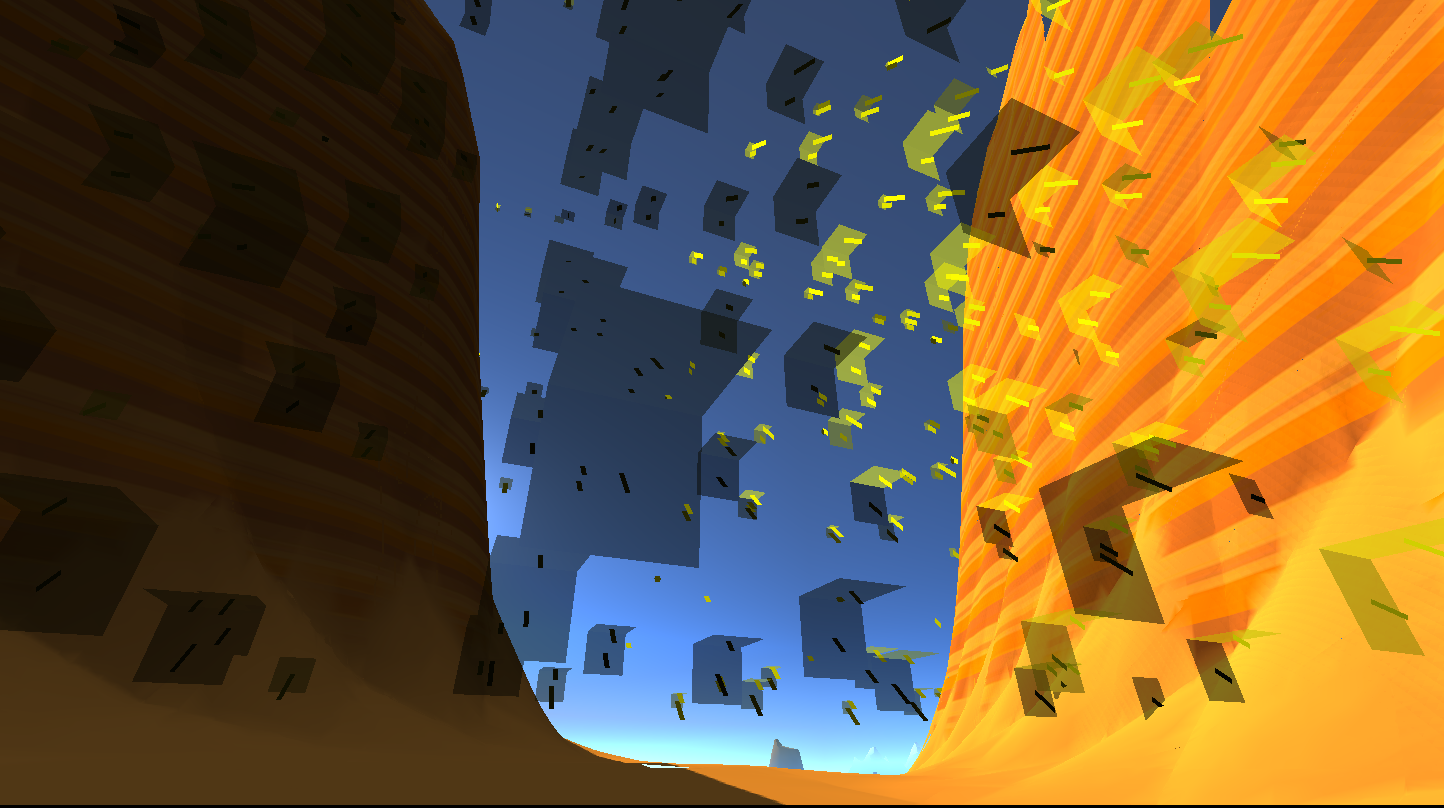

Here is how that looks after the simulation runs. This is a scene of a gorge in some sort of canyon. Sunlight has a narrow entrance:

The light nodes are rendered as two planes showing the light color and intensity.

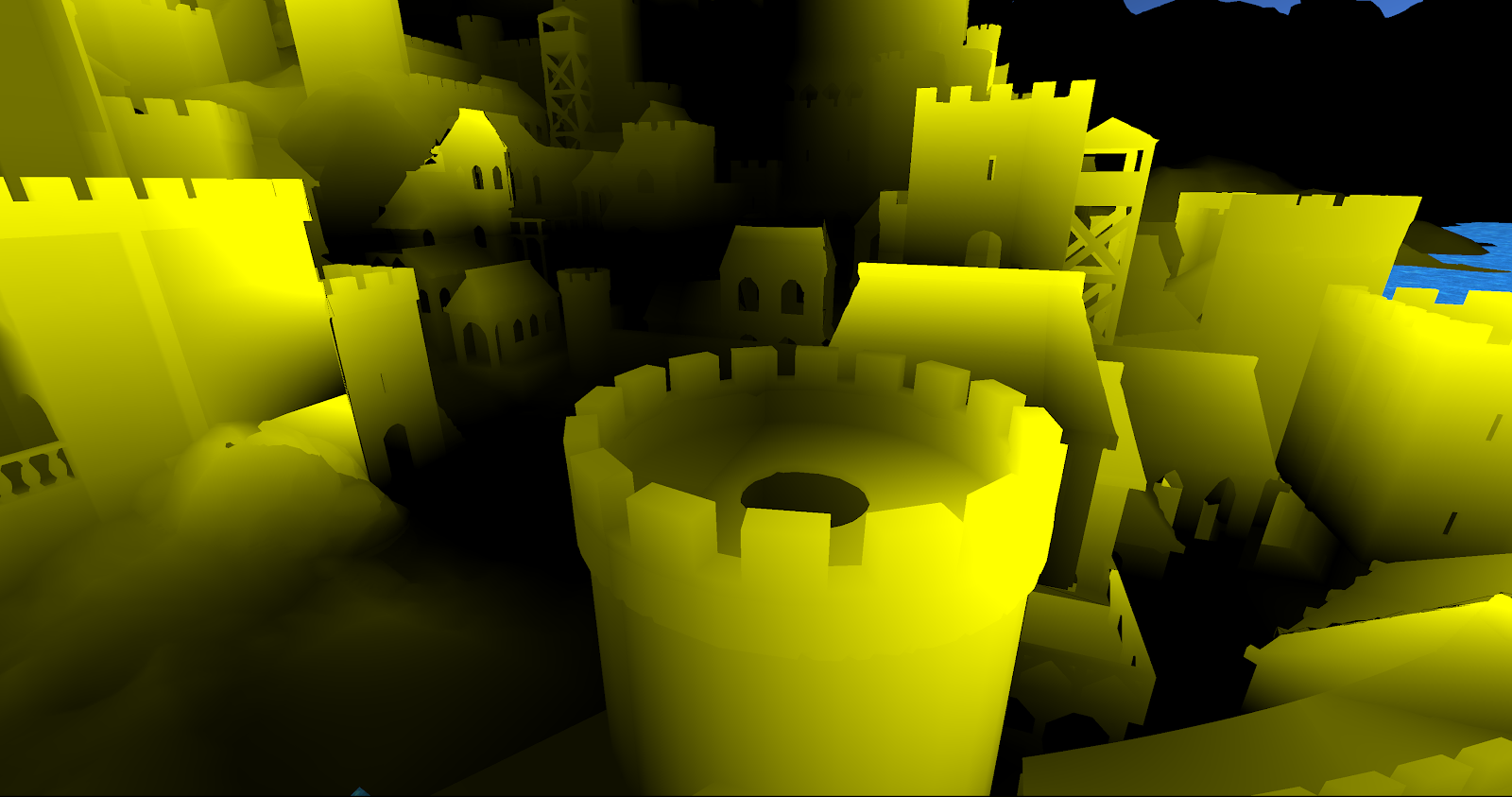

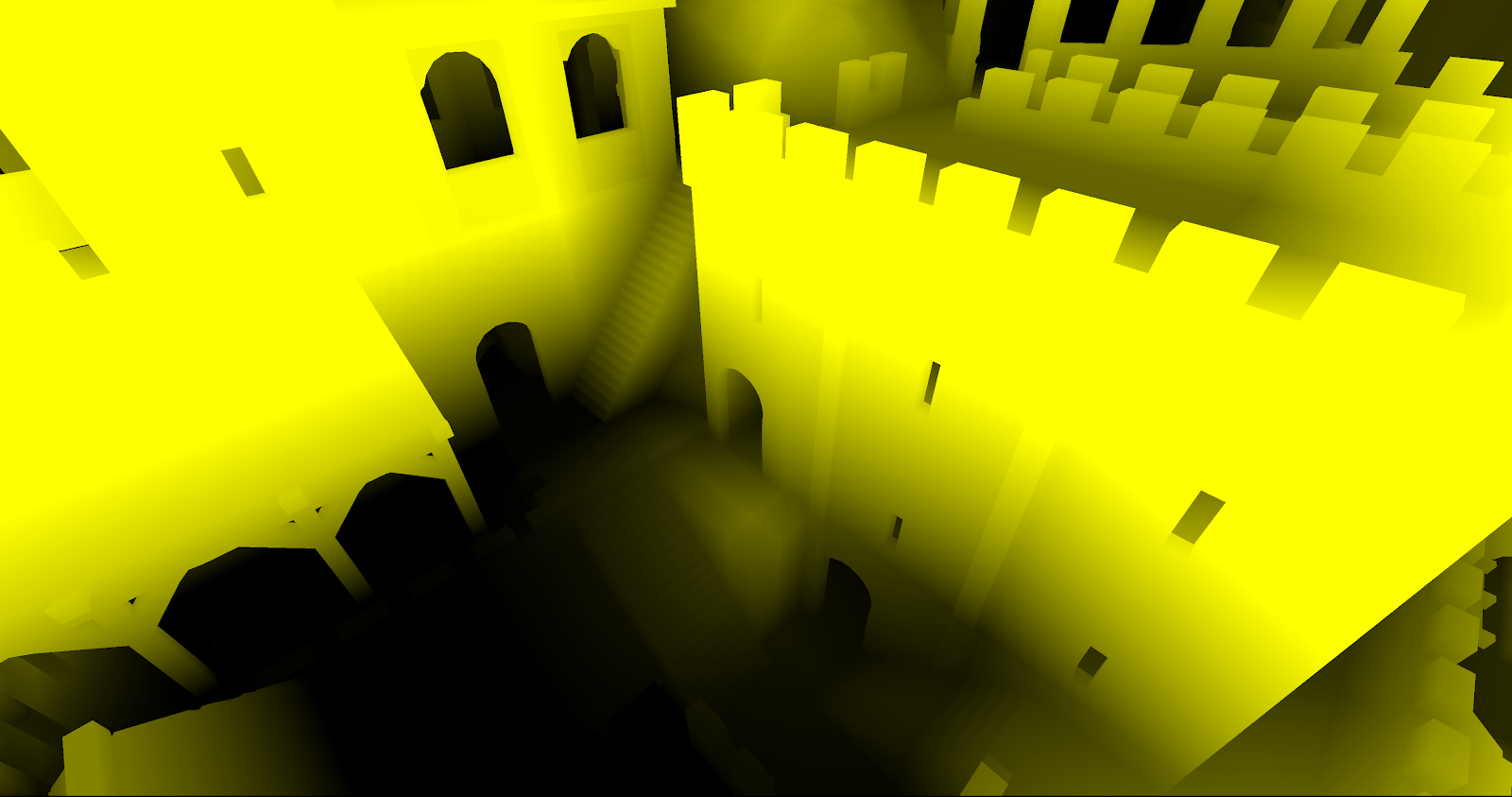

Here are other examples of feeding just sunlight to a complex scene. Yellow shows the energy picked up from the sunlight.

Taking light bounces into account is then easy. Unlike the sunlight, the bounced light is not seeded from outside, it is produced by the simulation.

In the following image you can see the results of multiple light bounces. We made the sunlight pure yellow and all the surfaces bounce pure red:

You can see how the light probes (the boxes) are picking up red light from below. Here is the same setup for a different scene:

This is still a work in progress, but I like the fact that it takes a fraction of a second to compute a full light solution, regardless of how complex the scene is. Soon we will be testing this in a forest setting. I miss the green light coming through the canopies from those early radiosity days.

Tuesday, September 29, 2015

All your voxel are belong to you

The same network effect that made cities preferable to the countryside was multiplied as networks have gone all electric and digital. But networks are only as important as the stuff they carry. For a digital network, that would be data.

A very interesting property of voxels to me is their simplicity. The simpler it is to produce, share and consume a data format, the better chance it has to multiply across a network.

This is not only because simplicity makes things practical and easier. I believe you can only own those things you understand. Without true ownership of your data, any hope for its democratic production or consumption is lost.

This is how we see people sharing voxel content:

The data format used here is quite simple and hopefully universal at describing 3D content. I'll get to it in a future post.

Friday, September 25, 2015

Another oldie

This one takes me back, I realize I never posted a video of this demo:

I had only posted screenshots here and there. This is Voxel Farm circa 2013. Two voxel years feel like an eternity.

I think it still holds up pretty nicely. I appreciate that the asteroid surfaces have craters and other interesting variations in them. It is not just mindless noise.

Tuesday, September 22, 2015

A bit of recap

I will be announcing some really cool new features soon, which are keeping us super busy. It has been all about giant turtles, photons... quite unreal if you ask me.

So while we wait, here are a couple of things you may have missed.

First, did you know we have made all engine documentation public? You can find it here:

http://docs.voxelfarm.com

The reference for the entire C++ library is covered in the programmer's section. The actual code is not there (sorry, we still require a license) but you can see all the headers, classes, etc. It may give you a good idea of how the whole thing works and is built.

Also here is an oldie we never got to publish. It shows how a single tool, the selection box, can be used to perform many different tasks.

My favorite bit is around the 5 minute mark, where the Cut&Paste is used to reshape an existing arcade. That is a good example of emergent possibilities coming out of very simple mechanics.

Also, we got two team members to share their work sessions on Twitch. It is mostly Voxel Studio, but it also shows how the assets that go into a Voxel Farm project are created. They stream most days:

http://www.twitch.tv/voxelfarmgabriel

http://www.twitch.tv/voxelfarmociel

Feel free to take a look. Be nice, they are both very gentle creatures.

Thursday, August 27, 2015

Voxel Occlusion

Real time rendering systems (like the ones in game engines) have two big problems to solve: First to determine what is visible from the camera's point of view, then to render what is visible.

While rendering is now a soft problem, finding out what is potentially visible remains difficult. There is a long history of hackery in this topic: BSP trees, PVS, portals etc. (The acronyms in this case make it sound simpler.) These approaches perform well in some cases only to fail miserably in others. What works for indoors breaks in large open spaces. To make it worse, these visibility structures take a long time to build. For an application where the content constantly changes, they are a very poor choice or not practical at all.

Occlusion testing, on the other hand, is a dynamic approach to visibility. The idea is simple: using a simplified model of the scene we can predict when some portions of the scene become eclipsed by other parts of the scene.

The challenge is how to do it very quickly. If the test is not fast enough, it could still be faster to render everything than to test and then render the visible parts. It is necessary to find simplified models of the scene geometry. Naturally these simple, approximated models must cover as much of the original content as possible.

Voxels and clipmap scenes make it very easy to perform occlusion tests. I wrote about this before: Covering the Sun with a finger.

We just finished a new improved version of this system, and we were ecstatic to see how good the occluder coverage turned out to be. In this post I will show how it can be done.

Before anything else, here is a video of the new occluder system in action:

A Voxel Farm scene is broken down into chunks. For each chunk the system computes several quads (four-vertex polygons) that are fully inscribed in the solid section of the chunk. They are also as large as possible. A very simple example is shown here, where a horizontal platform produces a series of horizontal quads:

These quads do not need to be axis aligned. As long as they remain inside the solid parts of the cell, they could go in any direction. The following image shows occluder quads going at different angles:

Here is how it works:

Each chunk is generated or loaded as a voxel buffer. You can imagine this as a 3D matrix, where each element is a voxel.

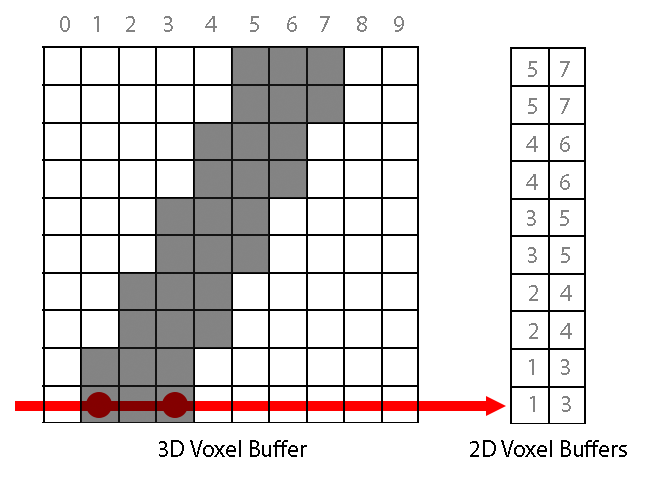

The voxel buffer is scanned along each main axis. The following images depict the process of scanning along one direction. Below there is a representation of the 3D buffer as a slice. If this was a top down view, you can imagine this is a vertical wall going at an angle:

For each direction, two 2D voxel buffers are computed. One stores where the ray enters the solid and the second where the ray exits the solid.
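The scan along one axis can be sketched as follows. This is a minimal illustration, assuming the chunk is a nested list `voxels[z][y][x]` of booleans and that only the first solid run along each ray matters; the function name `scan_depths` and the use of `None` for "no solid found" are choices made for this example.

```python
def scan_depths(voxels):
    """Scan a voxel buffer along its first axis (z).

    voxels[z][y][x] is True for solid. Returns two 2D buffers indexed [y][x]:
    the depth where a ray travelling along +z first enters solid, and the
    depth where it exits that first solid run. None means the ray met no solid.
    """
    depth, height, width = len(voxels), len(voxels[0]), len(voxels[0][0])
    enter = [[None] * width for _ in range(height)]
    exit_ = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            z = 0
            while z < depth and not voxels[z][y][x]:
                z += 1
            if z == depth:
                continue  # the ray crossed the chunk without hitting solid
            enter[y][x] = z
            while z < depth and voxels[z][y][x]:
                z += 1
            exit_[y][x] = z  # first empty depth after the solid run
    return enter, exit_


# A two-voxel-thick wall going at an angle, seen as a single slice (height 1).
wall = [[[x + 1 <= z <= x + 2 for x in range(4)]] for z in range(6)]
enter, exit_ = scan_depths(wall)
```

For this slanted wall the entry depths step from 1 to 4 and the exit depths from 3 to 6, one step per column.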

For each 2D buffer, the maximum solid rectangle is computed. A candidate rectangle can grow if the neighboring point in the buffer is also solid and its depth value does not differ by more than a given threshold.

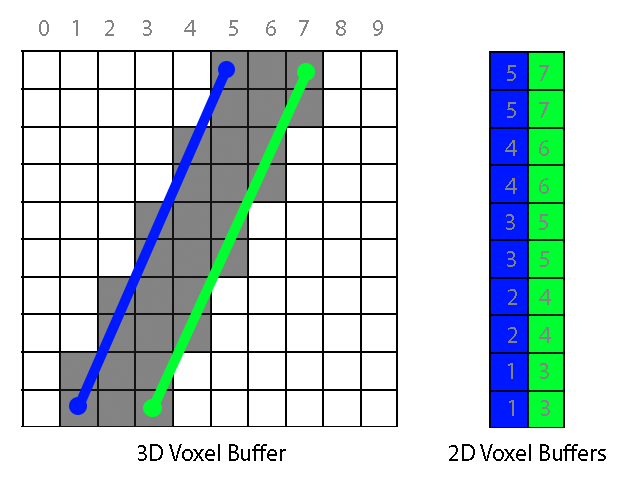
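One way to read the rule above is sketched below. It is a brute-force search that is only practical for small buffers, and it is my own interpretation rather than the engine's routine: a rectangle is accepted when every cell is solid and every pair of adjacent cells inside it differs in depth by at most `threshold`. `None` marks a non-solid cell.

```python
def rect_ok(depth, x0, y0, x1, y1, threshold):
    """True if every cell in the inclusive rectangle is solid (not None) and
    adjacent cells inside it differ in depth by at most threshold."""
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            d = depth[y][x]
            if d is None:
                return False
            if x > x0 and abs(d - depth[y][x - 1]) > threshold:
                return False
            if y > y0 and abs(d - depth[y - 1][x]) > threshold:
                return False
    return True


def max_rectangle(depth, threshold=1):
    """Return the largest valid rectangle as (x0, y0, x1, y1), inclusive,
    or None if the buffer has no solid cell."""
    h, w = len(depth), len(depth[0])
    best, best_area = None, 0
    for y0 in range(h):
        for x0 in range(w):
            for y1 in range(y0, h):
                for x1 in range(x0, w):
                    area = (x1 - x0 + 1) * (y1 - y0 + 1)
                    if area > best_area and rect_ok(depth, x0, y0, x1, y1, threshold):
                        best, best_area = (x0, y0, x1, y1), area
    return best


# A depth buffer with a jump from 5 to 9 between the third and fourth columns.
buf = [
    [5, 5, 5, 9, 9],
    [5, 5, 5, 9, 9],
    [5, 5, 5, 9, 9],
]
strict = max_rectangle(buf, threshold=1)   # stops at the jump
relaxed = max_rectangle(buf, threshold=4)  # the jump is tolerated
```

With a threshold of 1 the rectangle stops at the depth jump and covers only the first three columns. Raising the threshold to 4 lets it cover the whole buffer, at the cost of a quad that no longer follows the surface closely.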

Each buffer can produce one quad, showing in blue and green in the following image:

In fact, if we run again the function that finds the maximum rectangle on the second 2D buffer it will return another quad, this time covering the missing piece :

It is also possible not to use our rasterizer test at all and feed the quads to a system like Umbra. What really matters is not how the test is performed, but how good and simple the occluder quads are.

While this can still be improved, I'm very happy with this system. It is probably the best optimization we have ever done.

While rendering is now a soft problem, finding out what is potentially visible remains difficult. There is a long history of hackery in this topic: BSP trees, PVS, portals etc. (The acronyms in this case make it sound simpler.) These approaches perform well for some cases to then fail miserably in other cases. What works for indoors breaks in large open spaces. To make it worse, these visibility structures take long to build. For an application where the content constantly changes, they are a very poor choice or not practical at all.

Occlusion testing on the other hand is a dynamic approach to visibility. The idea is simple: using a simplified model of the scene we can predict when some portions of the scene become eclipsed by another parts of the scene.

The challenge is how to do it very quickly. If the test is not fast enough, it could still be faster to render everything than to test and then render the visible parts. It is necessary to find out simplified models of the scene geometry. Naturally these simple, approximated models must cover as much of the original content as possible.

Voxels and clipmap scenes make it very easy to perform occlusion tests. I wrote about this before: Covering the Sun with a finger.

We just finished a new improved version of this system, and we were ecstatic to see how good the occluder coverage turned out to be. In this post I will show how it can be done.

Before anything else, here is a video of the new occluder system in action:

A Voxel Farm scene is broken down into chunks. For each chunk the system computes several quads (a four vertex polygon) that are fully inscribed in the solid section of a chunk. They also are as large as possible. A very simple example is shown here, where a horizontal platform produces a series of horizontal quads:

Here is how it works:

Each chunk is generated or loaded as a voxel buffer. You can imagine this as a 3D matrix, where each element is a voxel.

The voxel buffer is scanned along each main axis. The following images depict the process of scanning along one direction. Below there is a representation of the 3D buffer as a slice. If this was a top down view, you can imagine this is a vertical wall going at an angle:

For each direction, two 2D voxel buffers are computed. One stores where the ray enters the solid and the second where the ray exits the solid.

For each 2D buffer, the maximum solid rectangle is computed. A candidate rectangle can grow if the neighboring point in the buffer is also solid and its depth value does not differ more than a given threshold.

Each buffer can produce one quad, showing in blue and green in the following image:

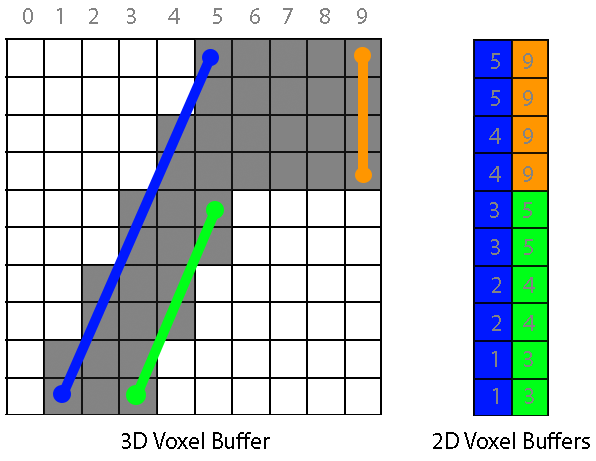

Here is another example where a jump in depth (5 to 9) makes the green occluder much smaller:

In fact, if we run again the function that finds the maximum rectangle on the second 2D buffer it will return another quad, this time covering the missing piece :

Once we have the occluders for all chunks in a scene, we can test very quickly whether a given chunk in the scene is hidden behind other chunks. Our engine does this using a software rasterizer, which renders the occluder quads to a depth buffer. This buffer can be used to test all chunks in the scene. If a chunk's area on screen is covered in the depth buffer by a closer depth, it means the chunk is not visible.

This depth buffer can be very low resolution. We currently use a 64x64 buffer to make sure the software rasterization is fast. Here you can see how the buffer looks like:

While this can still be improved, I'm very happy with this system. It is probably the best optimization we have ever done.
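For readers who want to experiment with the depth buffer test described in this post, here is a minimal sketch. It assumes occluders and chunks have already been projected to screen space, and it simplifies both to axis-aligned rectangles with a single depth value, which a real rasterizer would not do. All function names are hypothetical.

```python
SIZE = 64  # low-resolution depth buffer, as mentioned in the post
FAR = float("inf")


def new_depth_buffer():
    """Create an empty buffer where every pixel is infinitely far away."""
    return [[FAR] * SIZE for _ in range(SIZE)]


def draw_occluder(buf, x0, y0, x1, y1, depth):
    """Rasterize an occluder as a screen-space rectangle at constant depth,
    keeping the closest depth seen so far at each pixel."""
    for y in range(max(y0, 0), min(y1, SIZE - 1) + 1):
        for x in range(max(x0, 0), min(x1, SIZE - 1) + 1):
            buf[y][x] = min(buf[y][x], depth)


def is_hidden(buf, x0, y0, x1, y1, nearest_depth):
    """A chunk is hidden if every on-screen pixel of its bounds already holds
    a closer occluder depth. Bounds entirely off screen count as hidden."""
    for y in range(max(y0, 0), min(y1, SIZE - 1) + 1):
        for x in range(max(x0, 0), min(x1, SIZE - 1) + 1):
            if buf[y][x] >= nearest_depth:
                return False
    return True


buf = new_depth_buffer()
draw_occluder(buf, 10, 10, 40, 40, depth=5.0)

behind = is_hidden(buf, 20, 20, 30, 30, nearest_depth=12.0)   # fully covered
peeking = is_hidden(buf, 35, 35, 50, 50, nearest_depth=12.0)  # sticks out
in_front = is_hidden(buf, 20, 20, 30, 30, nearest_depth=3.0)  # closer than occluder
```

Only the first chunk is reported as hidden. The test is conservative in the useful direction: a chunk is culled only when every pixel of its bounds is covered by something closer.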

Wednesday, August 12, 2015

Texture Grain

So far we have been using triplanar mapping for texturing voxels. This is good enough for terrain but does not look right every time for man-made structures. With triplanar mapping, textures are always aligned to the universal coordinate planes. If you are building features at an angle, the texture orientation ignores that and continues to follow the universal grid. That has been a thorny problem for all voxel creators and enthusiasts out there.
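To see why the texture ignores the direction of the feature, here is a common formulation of triplanar mapping as a sketch. It is not necessarily the exact variant used in the engine, and the `sharpness` parameter and function names are assumptions. It expects a non-zero normal.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X, Y and Z projections, from the surface normal."""
    nx, ny, nz = (abs(c) ** sharpness for c in normal)
    total = nx + ny + nz
    return (nx / total, ny / total, nz / total)


def triplanar_uvs(position):
    """Texture coordinates for each projection, taken from world position only."""
    x, y, z = position
    return {"x": (y, z), "y": (x, z), "z": (x, y)}


flat_ground = triplanar_weights((0.0, 1.0, 0.0))
s = 2 ** -0.5
diagonal_beam = triplanar_weights((s, s, 0.0))
uvs = triplanar_uvs((1.0, 2.0, 3.0))
```

The normal only decides how the three projections are blended. The texture coordinates come straight from the world position, so a beam built at 45 degrees gets an even mix of two grid-aligned projections instead of a texture that runs along the beam.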

It does not have to be like that anymore. Take a look at this previously impossible structure:

It is two wooden beams going at an angle. The texture grain properly follows the line direction.

It is not only about lines, this can be applied to any tool. In the following video you can see how curves also preserve the texture grain:

This new ability is a nice side effect of including UV mapping information in voxels. This is no different from the zebras and leopards we stamped into the world in earlier videos. In this case the tool comes up with the UV mapping information on-the-fly.

UV mapped voxels are not yet released in Voxel Farm. We only just got to work on the tools so they take full advantage of them, but it really looks to me like this will take our engine to a whole new level.

Saturday, July 4, 2015

Export your creations

We just completed a new iteration on the FBX export feature. This new version is able to bake textures along with the geometry. Check it out in this video:

The feature seems rather simple to the user, however there are massive levels of trickery going on under the hood.

When you look at a Voxel Farm scene a lot of what you see is computed in realtime. The texturing of individual pixels happens in the GPU where the different attributes that make each voxel material are evaluated on the fly. If you are exporting to a static medium, like an FBX file, you cannot have any dynamic elements computed on the fly. We had no choice but to bake a texture for each mesh fragment.

The first step is to unwrap the geometry and make it flat so it fits a square 2D surface. Here we compute UV coordinates for each triangle. The challenge is how to fit all triangles in a square while minimizing wasted space and any sort of texture distortion.

Here is an example of how a terrain chunk is unwrapped into a collection of triangles carefully packed into a square:

The image also shows that different texture channels like diffuse and normal can then be written into the final images. That is the second and last step, but there is an interesting twist here.

Since we are creating a texture for the mesh anyway, it would be a good opportunity to include features not present in the geometry at the current level of detail. For instance, consider these tree stumps that are rendered using geometry at the highest level of detail:

Each subsequent level of detail will have less resolution. If we go ahead five levels, the geometric resolution won't be fine enough for these stumps to register. Wherever there was a stump, we may now get just a section of a much larger triangle.

Now, for the FBX export we are allocating unique texture space for these triangles. At this level of detail the texture resolution may still be enough for the stumps to register. So instead of evaluating the texture for the low resolution geometry, we project a higher resolution model of the same space into the low detail geometry. Here you can see the results:

Note how a single triangle can contain the projected image of a tree stump.
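The projection step can be sketched like this. It is a simplified illustration, not the SDK code: each texel inside the triangle's UV footprint is mapped back to a world position with barycentric coordinates, and a stand-in function `sample_detail` plays the role of the higher resolution content. All names here are hypothetical.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates of 2D point p in triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    u = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    v = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return u, v, 1.0 - u - v


def bake_triangle(uvs, positions, sample_detail, size):
    """Fill a size x size texture for one low-detail triangle.

    uvs: three (u, v) pairs in [0, 1]; positions: the matching 3D vertices.
    Texels outside the triangle are left as None.
    """
    texture = [[None] * size for _ in range(size)]
    for j in range(size):
        for i in range(size):
            p = ((i + 0.5) / size, (j + 0.5) / size)  # texel center in UV space
            w = barycentric(p, *uvs)
            if min(w) < 0.0:
                continue  # texel lies outside the triangle
            world = tuple(
                sum(w[k] * positions[k][axis] for k in range(3))
                for axis in range(3)
            )
            texture[j][i] = sample_detail(*world)
    return texture


def sample_detail(x, y, z):
    """Stand-in for the high resolution content: a stump of radius 1 at (2, 2)."""
    return "stump" if (x - 2.0) ** 2 + (z - 2.0) ** 2 <= 1.0 else "grass"


# One large, flat, low-detail triangle, 8 units along each short side.
uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
positions = [(0.0, 0.0, 0.0), (8.0, 0.0, 0.0), (0.0, 0.0, 8.0)]
texture = bake_triangle(uvs, positions, sample_detail, size=8)
```

The triangle itself has no geometry for the stump, but the baked texels around world position (2, 2) carry it anyway.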

This process is still very CPU intensive as we need to compute higher resolution versions for the low resolution cells. This iteration was mostly about getting the feature working and available for users. We will be optimizing this in the near future.

The algorithms used here are included in the SDK and engine source code. This sort of technique is called "Detail Transfer" or "Detail Recovery". It is also the cornerstone for a much better looking LOD system, as very rich voxel/procedural content can be captured and projected on top of fairly simple geometry.

Friday, June 26, 2015

Castle by the lake

Here is a castle made using our default castle grammar set. This was captured from a new version of the Voxel Studio renderer we will be rolling out soon. The rendering is still a work in progress (the texture LOD transitions are not blended at all, the material scale is off, etc.) so some imagination on your side is required. Also the castle is only partially completed, as we intend to fill the little island.

There are no mesh props here, everything on screen comes from voxels. Also there are no handmade voxel edits, this is strictly the output of grammars. Also note that the same few grammars are applied over and over. For instance curved walls are created with the same wall grammar used for the straight walls, they just run in a curved scope.
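The idea of running one grammar in different scopes can be sketched as below. This is not the Voxel Farm grammar language, just a toy model under my own assumptions: the grammar emits block centers in a local wall space, and a scope is a function that maps local coordinates to the world.

```python
import math


def wall_grammar(length, height, block=1.0):
    """Yield block centers (u, v) in local wall space: u along the wall, v up."""
    for row in range(int(height / block)):
        for col in range(int(length / block)):
            yield ((col + 0.5) * block, (row + 0.5) * block)


def straight_scope(origin, direction):
    """Map local (u, v) to world space along a straight line on the ground."""
    ox, oy, oz = origin
    dx, dz = direction
    return lambda u, v: (ox + u * dx, oy + v, oz + u * dz)


def curved_scope(center, radius):
    """Map local (u, v) to world space along a circular arc; u is arc length."""
    cx, cy, cz = center

    def to_world(u, v):
        angle = u / radius
        return (cx + radius * math.cos(angle), cy + v, cz + radius * math.sin(angle))

    return to_world


def run(grammar, scope):
    """Place every block produced by the grammar using the given scope."""
    return [scope(u, v) for u, v in grammar]


straight = run(wall_grammar(4, 2), straight_scope((0, 0, 0), (1, 0)))
curved = run(wall_grammar(4, 2), curved_scope((0, 0, 0), 10.0))
```

Both walls come from the same `wall_grammar` call and contain the same 8 blocks. Only the scope changes where they end up.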

This is a scene we are creating to test some new cool systems we are developing to handle high density content.

Sunday, May 31, 2015

Evolution of Procedural

This post is mostly about how I feel about procedural generation and where we will be going next.

A couple of months ago we released Voxel Farm and Voxel Studio. Many of you have already played with it and often we get the same questions: why so much focus on artist input, what happened to building entire worlds with the click of a button?

The short answer is "classic" real time procedural generation is bad and should be avoided, but if you stop reading here you will get the wrong idea, so I really encourage you to get to my final point.

While you can customize our engine to produce any procedural object you may think of, our current out-of-the-box components favor artist generated content. This is what our current workflow looks like:

It can be read like this: The world is the result of multiple user-supplied data layers which are shuffled together. Variety is introduced at the mixer level, so there is a significant procedural aspect to this, but at the same time the final output is limited to combinations of the samples provided by a human as input files.
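A toy mixer makes the limitation easy to see. This sketch is an assumption of mine about how such a shuffle could look, not the engine's mixer: each region of the world picks one of the artist-supplied sample tiles using a deterministic hash, so every output value is copied from some input sample.

```python
import hashlib


def region_hash(seed, rx, rz):
    """Deterministic pseudo-random integer for a region, stable across runs."""
    digest = hashlib.sha256(f"{seed}:{rx}:{rz}".encode()).digest()
    return int.from_bytes(digest[:4], "big")


def mix(samples, seed, x, z, region_size=4):
    """Height at (x, z): each region picks one artist-supplied sample tile."""
    rx, rz = x // region_size, z // region_size
    tile = samples[region_hash(seed, rx, rz) % len(samples)]
    return tile[z % region_size][x % region_size]


# Two hand-made 4x4 height tiles standing in for the user-supplied layers.
hills = [[1, 2, 2, 1], [2, 3, 3, 2], [2, 3, 3, 2], [1, 2, 2, 1]]
plains = [[0, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 1, 0]]
samples = [hills, plains]

world = [[mix(samples, "demo", x, z) for x in range(16)] for z in range(16)]
```

The mixer can arrange the tiles in many ways, but it never produces a height that was not already in `hills` or `plains`.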

This approach is fast enough to allow real time generation, and at the same time it can produce interesting and varied enough results to keep humans interested for a while. The output can be incredibly good, in fact as good as the talent of the human who created the input files. But here is also the problem. You still need to provide reasonably good input to the system.

This is the first piece of bad news. Procedural generation is not a replacement for talent. If you lack artistic talent, procedural generation is not likely to help much. You can amplify an initial set into a much larger set, but you can't turn a bad set into a good one. A microphone won't make you a good singer.

The second batch of bad news is the one you should worry about: Procedural generation has a cost. I have posted about this before. You cannot make something out of nothing. Entropy matters. It takes serious effort for a team of human creators to come up with interesting scenes. In the same way, you must pay a similar price (in energy or time) when you synthesize something from scratch. As a rule of thumb, the complexity of the system you can generate is proportional to the amount of energy you spend. If you think you can generate entire planets on-the-fly on a console or PC, you are in for some serious disappointment.

I will be very specific about this. Procedural content based on local mathematical functions like Perlin, Voronoi, etc. cannot hold our interest and it is guaranteed to produce boring content. Procedural content based on simulation and AI can rival human nature and what humans create, but it is not fast enough for real time generation.

Is there any point in pursuing real time procedural generation? Absolutely, but you have to take it for what it is. As I see it, the only available path at this point is to have large sets of interesting content that can be used to produce even larger sets, but there are limits to how much you can grow the content before it becomes predictable.

For real time generation, our goal will be to help people produce better base content sets. These could be produced on-the-fly, assuming the application allows some time and energy for it.

Here is where we are going next:

Hopefully that explains why we chose to start with a system that can closely obey the instructions of an artist. Our goal is to replace human work by automating the artist, not by automating the subject. We have not taken a detour; I believe this is the only way.

Wednesday, May 13, 2015

Toon Time

We are often asked if Voxel Farm can do a cartoony look. As a side project I decided to give it a try. This is what I managed to do in a couple of nights:

I had recently been exposed to Adventure Time by my kids, so the influences are clear. This is programmer's art, so go easy on me. Also, there is very little detail. All materials are solid colors and there are only two instanced objects (a tree and a stump), but I think it works for the most part.

You can read a guide on how this was built here. It served as an example of how to create a biome from scratch in Voxel Studio. We will probably expand this project to cover other topics like voxel instances and runtime extensions.

Wednesday, April 15, 2015

Unity 5 Update

Earlier this week we rolled out a rather large update to the Unity plugin. The update went mostly into improving the workflow between Voxel Farm and Unity. You can get an idea of how it feels from the following video:

My favorite feature so far is that we now get to see all the voxel geometry while in Unity's Scene mode. This makes it possible to place your traditional Unity objects (lights, geometry, characters, etc.) in precise reference to the voxel content.

Another key feature is that we now have working physics inside Unity. You can tell this is new because the video spends a good deal of time just cutting chunks out of buildings to watch them fall.

The shading has improved as well, although this is an area I hope adopters of the engine and SDK will expand by producing all sorts of cool new looks. The realistic rendering included by default in the plugin is an example of what can be done and how to approach rendering in general.

The plugin is still Windows only. That is probably the second most frequent request/question we get. This is because our code is unmanaged C++. I wish there was a simple way around this. At this point we are contemplating re-writing parts of the engine in C#, which will take precious time we could be spending on new features.

Sunday, March 22, 2015

UV-mapped voxels

For a while now the team has been working on a new aspect of our voxel pipeline. The idea is simple: we wanted to allow custom made, detailed texturing for voxel worlds.

In traditional polygon-based worlds, a lot of the detail you see has been captured in textures. The same textures are reused many times over the same scene. This brings a lot of additional detail at little memory cost. Each texture needs to be stored only once.

Let's say you have a column that appears many times over in a scene. You would create a triangle mesh for the column with enough triangles to capture just the silhouette and then use textures to capture everything else. A process called UV-mapping links each triangle in the mesh to a section of the texture.

This is how virtual worlds and games get to look their best. If we had to represent everything as geometry it would be too much information even for top-of-the-line systems. If given the choice, nobody would use UV-mapping, but there is really no choice.

With voxels you have the same problem. If you want to capture every detail using voxels you would need too many of them. This may be possible in the near future, maybe for different hardware generations and architectures, but I would not bet on voxels becoming smaller than pixels anytime soon.

The beauty of voxels is they can encode anything you want. We saw it would be possible to keep voxel resolutions low and still bring a lot of detail into the scene if we encoded UV mapping into voxels, just like vertices do for traditional polygon systems.

You can see some very early results in this video:

Here we are passing only 8 bits for UV, and there are still some issues to address with the voxelization. I hope you can see beyond these artifacts.
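As a rough illustration of what 8 bits buys you, here is a small Python sketch that quantizes a UV pair and measures the error. It assumes 8 bits per coordinate packed into one 16-bit value; that layout and the names `pack_uv` and `unpack_uv` are my assumptions for the example, not a description of how the engine stores UV.

```python
def pack_uv(u, v):
    """Quantize u and v (each in [0, 1]) to 8 bits and pack them together.

    ASSUMPTION: 8 bits per coordinate, u in the high byte. This layout is
    hypothetical and only serves to illustrate the precision trade-off.
    """
    qu = min(255, max(0, round(u * 255)))
    qv = min(255, max(0, round(v * 255)))
    return (qu << 8) | qv


def unpack_uv(packed):
    """Recover the approximate (u, v) pair from a packed 16-bit value."""
    return ((packed >> 8) & 0xFF) / 255, (packed & 0xFF) / 255


u, v = 0.3337, 0.9012
ru, rv = unpack_uv(pack_uv(u, v))
worst_case_error = 0.5 / 255  # half a quantization step per coordinate
```

With only 256 distinct positions per axis, any texture wider than 256 texels cannot be addressed texel by texel, which is one plausible source of visible artifacts at this precision.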

Luckily we need to store UV only for the voxels on the outside of a model, so the data is manageable. For procedural objects, voxels could also use UV. The rocks we instance over terrain could be using detailed textures instead of triplanar mapping. Same for trees and even man-made elements like buildings and ruins. For procedural voxels the UV adds little overhead since nothing has to be stored anyway.

Use of UV is optional. The engine is able to merge UV-mapped voxels with triplanar-mapped voxels on the fly. You can carve pieces out of these models, or merge them with procedural voxels and still have one single watertight mesh:

As you can see the leopard's legs do not go underground in the rendered mesh. Everything connects properly.

This is an earlier video that I think I never linked before:

Why go through all this trouble? UV-mapping took polygonal models to a whole new level of visual quality and performance. We are going through the same phase now.

This kind of encoding we have done for UV also opens the doors to new interesting applications, like animation. If you think about it, animation is not different from UV-mapping. Instead of mapping vertices to a texture we map vertices to bones, but it is pretty much the same. So, yes, that zebra could move one day.

Tuesday, March 10, 2015

Get Voxel Farm!

Almost a week ago we announced new licensing plans for Voxel Farm. We have come a long way since I asked you, the readers, what you would like to see come out of this project. It is hard to believe three years have passed.

So you have three different Voxel Farm flavors to pick from: Creator, Indie and Pro. But before adding anything else, a word of caution: There are rough edges. The documentation lacks coverage for many of the things you can already do with the engine.

We are committed to this project for the long run, and we will be providing constant updates. We want to deliver rather than make promises or hype new features. I do not see this release as early access, or as a request for the community to crowd-fund us. This software is released as-is.

In the proper hands there is a lot that can be achieved. There is no question you can use Voxel Farm today to make your next-gen killer game or app. Also, if you run into trouble you can always contact us and we will do our best to get you back on track.

The INDIE license gets you access to our SDK and the Voxel Studio creation tool. Here the engine core remains in binary form. These are C++ libs you can link into your project. The SDK contains a dozen different examples, including full source code for a Unity plugin. Very important: the Unity plugin uses a DLL, so your game will be Windows only. You will also need to develop on a Windows machine. There is a one-time fee of $295 USD, and a recurring fee of $19 USD per month. When your project has grossed more than $100,000 USD, a 5% royalty fee is applied. While your subscription is active, you will get free updates to the SDK, example code and tools.

The PRO license gets you full source code, plus the SDK, plus Voxel Studio. There is a one-time fee of $995 USD and then a recurring monthly fee of $95 USD. If your project makes more than $100,000 USD, a 2% royalty fee applies. There is an important twist here: The PRO license is organization-wide. It means your company only needs to purchase one license and it can be used by many different individuals within the organization. This also applies to the Voxel Studio tool. The INDIE license, on the other hand, is for just one individual.

And there is the CREATOR license. Here you get the Voxel Studio tool for $19 USD a year. Voxel Studio allows creating new worlds from scratch, including the different materials available for procedural generation and voxel editing. It also has fully functional L-System and grammar editing. The current version has limited exporting capabilities, so at this time your creations may be locked inside Voxel Studio, or you may be limited to collaborating with people and groups who have licensed INDIE or PRO. Whatever you create using this tool belongs to you. This is pretty much a content creation tool like Photoshop or Maya.
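If you want to compare INDIE and PRO for your own project, the arithmetic can be sketched in a few lines of Python. The fees and percentages are the ones listed above. Everything else is an assumption made for the example: in particular, the sketch applies the royalty to the full gross amount once a project passes $100,000, which is only one possible reading, so treat the license agreements as the authority. The names `LICENSES` and `license_cost` are made up for illustration.

```python
# Fees in USD, taken from the plan descriptions above.
LICENSES = {
    "INDIE": {"one_time": 295, "monthly": 19, "royalty_percent": 5},
    "PRO": {"one_time": 995, "monthly": 95, "royalty_percent": 2},
}
ROYALTY_THRESHOLD = 100_000


def license_cost(plan_name, months, gross_revenue):
    """Estimate the total paid for a plan over `months` of subscription.

    ASSUMPTION: once gross revenue exceeds the threshold, the royalty is
    charged on the whole gross amount. The actual terms may differ.
    """
    plan = LICENSES[plan_name]
    fixed = plan["one_time"] + plan["monthly"] * months
    royalty = 0
    if gross_revenue > ROYALTY_THRESHOLD:
        royalty = gross_revenue * plan["royalty_percent"] / 100
    return fixed + royalty


# One year of subscription, with and without royalties kicking in.
indie_small = license_cost("INDIE", 12, 0)        # 523
pro_small = license_cost("PRO", 12, 0)            # 2135
indie_big = license_cost("INDIE", 12, 200_000)    # 10523.0
pro_big = license_cost("PRO", 12, 200_000)        # 6135.0
```

Under these assumptions INDIE is cheaper while a project earns little, and PRO becomes cheaper once royalties dominate. The sketch covers a single seat only; it does not model the fact that PRO is organization-wide while INDIE is per individual.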

You can check out the license agreements in our online documentation.

As usual, let me know what you think. A lot of hard work has gone into this project, and the team has done a great job. I also want to thank all of you who stopped by our booth at GDC. It was great meeting you all and sharing our vision of the future with you.

(Photo: Michael and Rados at our booth at GDC 2015)

Saturday, February 21, 2015

The Farm in Voxel Farm

In the past months we have been working on a very cool system. I will describe it briefly for now, but I would really like to hear your opinions on this. We call it "The Farm".

The idea is simple: we are building a cloud service where everyone can contribute to any voxel world or scene they like. Whatever you contribute is remembered, but it is also kept on your own layer. Whatever you do, you cannot overwrite other people's content. And it is really up to others to decide whether they see your work.

When they do decide to see your work, your contributions to the scenery will be merged in real time. This is one of the key advantages of using voxels. It will look as if everything was created as a whole, but in reality what you see is the sum of different content streams which remain isolated from each other at all times.

This is pretty much like Twitter (or Facebook). You can tweet anything you want, but if you have no followers, nobody will really see it. Like Twitter, this is real time. If someone is following you and you change a part of a world they happen to be looking at, they will see the changes right away.

What I find powerful about this idea is that creative groups can be assembled ad-hoc. If you and a few friends want to create together, there is no need to set up servers or anything like that. People in the group just need to follow each other.

If someone in the group goes rogue, it only takes people to un-follow that person to make all of his/her changes disappear.
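The follow model can be sketched in a few lines of Python. This is a toy, in-memory illustration and not the Farm service: the class name `Farm`, its methods, and the rule for resolving two authors editing the same voxel (authors are merged in alphabetical order, so the last one wins) are all assumptions made for the example.

```python
class Farm:
    """Toy model: one private layer per user, merged per viewer."""

    def __init__(self):
        self.layers = {}     # user -> {voxel coordinate: material}
        self.following = {}  # viewer -> set of authors they follow

    def edit(self, user, coord, material):
        # An edit only ever touches the author's own layer.
        self.layers.setdefault(user, {})[coord] = material

    def follow(self, viewer, author):
        self.following.setdefault(viewer, set()).add(author)

    def unfollow(self, viewer, author):
        self.following.get(viewer, set()).discard(author)

    def view(self, viewer):
        """Merge the viewer's own layer with the layers they follow.

        ASSUMPTION: overlapping edits are resolved by merging authors
        in alphabetical order, which is an arbitrary choice.
        """
        merged = {}
        for author in sorted(self.following.get(viewer, set()) | {viewer}):
            merged.update(self.layers.get(author, {}))
        return merged


farm = Farm()
farm.edit("alice", (0, 0, 0), "stone")
farm.edit("bob", (1, 0, 0), "wood")
farm.follow("bob", "alice")
seen_while_following = farm.view("bob")   # both voxels
farm.unfollow("bob", "alice")
seen_after_unfollow = farm.view("bob")    # only bob's voxel
```

Note that un-following changes only what the viewer sees. Alice's layer is never modified by anything Bob does, which is the isolation property described above.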

We want your grandma using this so we have made it very simple for people to participate. You just need to say you want to use the Farm service for a particular project (a world) and you are set:

This system is also similar to source control systems like GitHub. At some point someone may decide to merge all contributions from a team of builders into a single "stream". Anyone following that stream will get the changes then. A project manager (or a Guild leader) can decide which team member streams to merge, which ones to reject.

We are using a revolutionary server-side architecture based on Dynamo/Reactor patterns to achieve this. By design we can guarantee very low latency and that all your data is remembered, but this will likely require a small monthly fee from everyone involved. Commitment to this level of service has really high requirements.

That's it: Everyone participates, everyone decides what to see and it is very simple to get in.

Oh, and very important: Your data is always yours.

Monday, January 5, 2015

Selection Twist

If you played SOE's Landmark during alpha you will remember the first iteration of the Line tool. It had some serious issues as soon as you started shifting the start and end position. We realized then that we needed a more organic approach to the line volumes. We were using flat planes to define the line volume and they were not able to transition smoothly enough from one end to the next.

In order to fix that, we chose to take on a bigger feature that had the line tool as a special case. This is usually bad engineering practice, since you just don't make a problem bigger to take care of a smaller one, but it turned out right. The line tool with disjoint ends was a special case of a mesh under a free-form deformation volume (FFD), so we did just that.

As you can see in the previous image, the sides of the line volume gradually curve from the start position to the end.

This has been in use for a while now in the line tool, but we just got time to add control points for the new selection tool. This allows many new cool shapes that were not possible before, like a simple bar that has been twisted:
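For readers who have not met free-form deformation before, here is a minimal Python sketch of the idea applied to a twisted bar. It uses the smallest possible lattice, two control points per axis, where the usual Bernstein weights reduce to plain trilinear interpolation. This is a textbook-style illustration and not our tool's implementation; the function names and the unit-cube parameterization are assumptions made for the example.

```python
import math


def identity_lattice():
    """Eight control points sitting on the corners of the unit cube."""
    return {(i, j, k): (float(i), float(j), float(k))
            for i in (0, 1) for j in (0, 1) for k in (0, 1)}


def twist_end(lattice, angle):
    """Rotate the four control points at x = 1 around the bar's long axis."""
    c, s = math.cos(angle), math.sin(angle)
    twisted = dict(lattice)
    for (i, j, k), (x, y, z) in lattice.items():
        if i == 1:
            dy, dz = y - 0.5, z - 0.5
            twisted[(i, j, k)] = (x, 0.5 + c * dy - s * dz, 0.5 + s * dy + c * dz)
    return twisted


def deform(lattice, point):
    """Map a point given in lattice coordinates (each in [0, 1])
    to its deformed position by blending the control points."""
    s, t, u = point
    out = [0.0, 0.0, 0.0]
    for (i, j, k), control in lattice.items():
        weight = ((s if i else 1 - s) *
                  (t if j else 1 - t) *
                  (u if k else 1 - u))
        for axis in range(3):
            out[axis] += weight * control[axis]
    return tuple(out)


lattice = twist_end(identity_lattice(), math.pi / 2)
start_corner = deform(lattice, (0.0, 0.0, 0.0))  # unchanged end of the bar
end_corner = deform(lattice, (1.0, 0.0, 0.0))    # corner rotated by 90 degrees
midway = deform(lattice, (0.5, 0.0, 0.0))        # blend of the two ends
```

Moving a control point drags along every point of the volume in proportion to its weight, which is why the sides of the shape change gradually from one end to the other instead of breaking into flat planes.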

In general the free-form deformation can produce pretty much anything you want. You can see other applications in this video:

The same operations can be performed over copy/pasted content and even the output of procedural grammars. This is already my favorite tool. If you are into twisting and bending, I'm sure you will love it too.
